Sponsored

Two years ago, Objectivity was asked to help deliver a new digital ecosystem. The client had a significant market share but wasn’t perceived as innovative or digitally savvy. To change this, they decided to build a software platform to manage their asset portfolio.

The client’s advantage was that they were close to the assets. They could easily keep the asset data up to date in the system, which made it possible to develop more advanced end-user functionality. Later, the solution was supposed to integrate with third-party systems and IoT sensors to process and serve even more data to users. From a business perspective, all this made perfect sense (“data is the new gold”); however, time and budget were limited.

After initial workshops with the customer, we had three months to deliver an MVP. During this period, we had to assemble a team, understand the business domain, co-create the product vision, define the architecture, and deliver a working MVP in time for a trade show.

As this was a lot to do in a short time, we had to set the right priorities and agree on acceptable constraints. The result couldn’t be a mere prototype: the expectation was that if a potential client wanted the product after the demo event, we should be able to roll it out within a quarter. And, to make the project a bit more challenging, the product had to be cloud agnostic, easily scalable, and able to handle multi-tenancy. For a technical person, this raises one important question: what technical trade-offs did we have to make to deliver this?

Technology considerations

Gregor Hohpe says that good architecture is like selling options. With a financial option, such as a forex option, you pay now for the right to buy or sell at a fixed price in the future. Similarly, in software, you design a solution so that it leaves an ‘open door’ to change some of its components without too much hassle. Sometimes you exercise those options (e.g. when you change the payment provider for your e-commerce site), and sometimes you don’t. Remember the good old days, when many of us prepared for a database engine change that never happened?

Sceptics might ask, “How real is the risk of vendor lock-in?” Some of you probably remember when Google raised the prices of its Maps API by 14x (in certain scenarios) in 2018. Episodes like this show that the threat is real.

So, how does this apply to being cloud agnostic? Is streamlined cloud independence even possible? Some say that “cloud agnostic architecture is a myth,” or that “if you believe in the cloud (and its speed), you can’t be agnostic, as it forces you to use the lowest common denominator of all cloud services”. The chart below shows the spectrum of available options:

In this case, cloud native means taking advantage of a given cloud provider’s strengths (e.g. better performance, better scalability, or lower costs).

Overall, the more you invest in agnostic architecture upfront, the less it will cost you to switch cloud providers. At the same time, however, a more complex, agnostic design decreases productivity and slows down delivery. Architects are challenged to find a satisfactory optimum: a solution that’s as agnostic as possible while still respecting the agreed time and budget. How can this be achieved? One approach is to weigh switching costs, as suggested by Mark Schwartz, an Enterprise Strategist at AWS. He encourages businesses to consider:

1. The cost of leaving a cloud provider

2. The probability of having to leave

3. The cost of mitigating cloud switch risk

Furthermore, you should weigh these factors separately for each aspect of the solution, such as:

- deployment method

- hosting model

- storage

- programming language
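The three factors above can be combined into a rough decision rule. The Python sketch below is only an illustration under a simplifying assumption, not Schwartz’s own formula. It treats mitigation as worth paying for when its upfront cost is lower than the expected cost of leaving, meaning the cost of leaving multiplied by its probability. The class and field names and all figures are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SwitchingCostEstimate:
    """Hypothetical inputs for one aspect of a solution."""
    aspect: str
    cost_of_leaving: float         # estimated cost of migrating away from the provider
    probability_of_leaving: float  # chance of having to leave, between 0 and 1
    cost_of_mitigating: float      # upfront cost of a more agnostic design

    def __post_init__(self) -> None:
        if not 0.0 <= self.probability_of_leaving <= 1.0:
            raise ValueError("probability_of_leaving must be between 0 and 1")

    def expected_cost_of_leaving(self) -> float:
        # Risk-weighted cost of switching providers.
        return self.cost_of_leaving * self.probability_of_leaving

    def worth_mitigating(self) -> bool:
        # Simplification: assumes mitigation removes the switching cost entirely.
        return self.cost_of_mitigating < self.expected_cost_of_leaving()


# Illustrative figures only.
estimates = [
    SwitchingCostEstimate("deployment method", 50_000, 0.6, 10_000),
    SwitchingCostEstimate("storage", 40_000, 0.3, 60_000),
]
for estimate in estimates:
    decision = "mitigate" if estimate.worth_mitigating() else "accept the risk"
    print(f"{estimate.aspect}: {decision}")
# deployment method: mitigate
# storage: accept the risk
```

Evaluated separately for each aspect, the same rule can give different answers. That is why the decision is made per aspect rather than for the solution as a whole.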

The story continues

A cloud agnostic solution can be a blessing or a curse: it can prepare you for future success, or it can delay delivery. In our asset management scenario, the following aspects were important:

- Small to medium cost of switching clouds. We knew we would be running the solution on both Azure and IBM Cloud in the near future and that, either way, it’s rational to have some sort of cloud provider exit strategy in place, even if you never use it

- Small to medium upfront investment. The client wanted to avoid making a large upfront technical investment, and we understood that we would have to leave some space for functional experimentation while defining a new product. Therefore, productivity had to be medium to high

- Lastly, however unlikely it may have been, we had to be prepared to run fully on-premises. At the time, we assumed that potential enterprise clients of our new product might require an on-premises installation of the platform

One way to assess an application’s architecture and its variations is to use a fitness function. This concept, borrowed from evolutionary computing, measures how close a given design comes to achieving the set of goals that matter for a given project.

For our scenario, we defined it as:

Architecture Fitness = Productivity − Upfront Investment − Cost of Switching + On-Premises Support
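The fitness function can be expressed directly in code. This Python sketch assumes each factor is scored on a simple ordinal scale (1 = low, 2 = medium, 3 = high) and that all terms are weighted equally, as in the formula. The option names and scores are made up for illustration and do not reproduce our actual assessment.

```python
from dataclasses import dataclass
from typing import Iterable, List

# Ordinal scale assumed for every factor: 1 = low, 2 = medium, 3 = high.


@dataclass(frozen=True)
class ArchitectureOption:
    name: str
    productivity: int         # higher is better
    upfront_investment: int   # higher is worse
    cost_of_switching: int    # higher is worse
    on_premises_support: int  # higher is better


def fitness(option: ArchitectureOption) -> int:
    # Architecture Fitness = Productivity - Upfront Investment
    #                        - Cost of Switching + On-Premises Support
    return (
        option.productivity
        - option.upfront_investment
        - option.cost_of_switching
        + option.on_premises_support
    )


def rank(options: Iterable[ArchitectureOption]) -> List[ArchitectureOption]:
    # Highest fitness first; sorted() is stable, so ties keep their input order.
    return sorted(options, key=fitness, reverse=True)


# Hypothetical options and scores, for illustration only.
options = [
    ArchitectureOption("Option A", productivity=3, upfront_investment=1,
                       cost_of_switching=3, on_premises_support=1),
    ArchitectureOption("Option B", productivity=1, upfront_investment=3,
                       cost_of_switching=1, on_premises_support=3),
    ArchitectureOption("Option C", productivity=2, upfront_investment=2,
                       cost_of_switching=2, on_premises_support=3),
]
for option in rank(options):
    print(option.name, fitness(option))
# Option C 1
# Option A 0
# Option B 0
```

Because the terms are weighted equally, a team that cares more about one factor, such as switching cost, could multiply that term by a weight. The formula above leaves that choice open.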

With that in mind, we considered the following options:

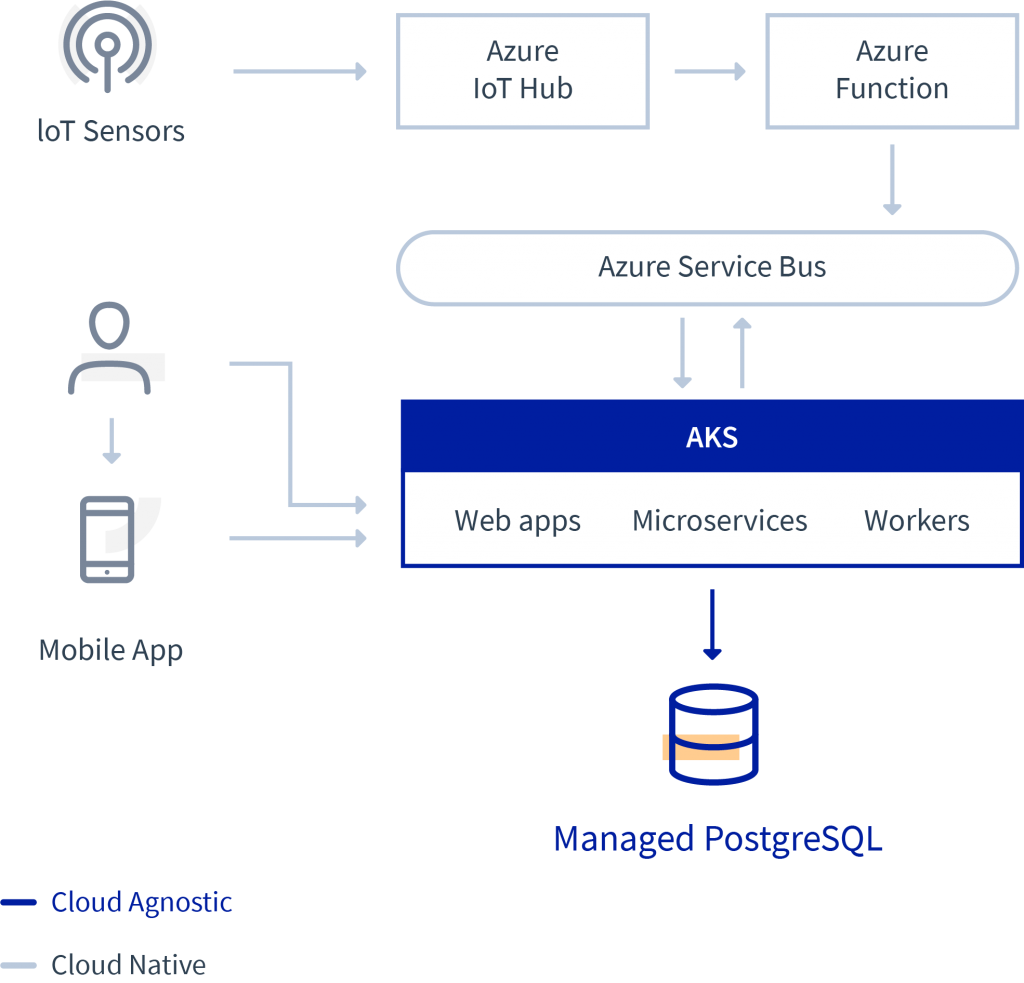

Our solution

We opted for a hybrid approach, as it met all our requirements. Containerisation is also low-hanging fruit when you are trying to avoid vendor lock-in in a new project. The majority of the solution was implemented in .NET Core as a set of services and workers running inside a managed Kubernetes cluster. To avoid wasting time on configuring persistent storage, we used managed PostgreSQL as a common data store for all components. PostgreSQL is an open-source database available as a managed service from multiple cloud providers, and it supports JSON documents, which was another important requirement for our platform.
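Because managed PostgreSQL is offered by multiple clouds, the application code can stay the same while only the connection settings change between providers. The Python sketch below builds a libpq-style key/value connection string from the standard libpq environment variables (`PGHOST`, `PGDATABASE`, and so on). The defaults, the choice of required settings, and the function names are assumptions. Our services were written in .NET Core, so this illustrates the idea rather than reproducing our code.

```python
import os
from typing import Mapping, Optional, Tuple

# libpq connection keyword -> (standard libpq environment variable, default).
# A default of None marks a required setting; the defaults are assumptions.
SETTINGS: Mapping[str, Tuple[str, Optional[str]]] = {
    "host": ("PGHOST", None),
    "port": ("PGPORT", "5432"),
    "dbname": ("PGDATABASE", None),
    "user": ("PGUSER", None),
    "password": ("PGPASSWORD", None),
    "sslmode": ("PGSSLMODE", "require"),
}


def quote_value(value: str) -> str:
    # libpq key/value syntax: empty values or values containing spaces are
    # single-quoted, with backslashes and single quotes escaped by a backslash.
    if value and not any(ch in value for ch in " '\\"):
        return value
    escaped = value.replace("\\", "\\\\").replace("'", "\\'")
    return f"'{escaped}'"


def postgres_dsn(env: Mapping[str, str] = os.environ) -> str:
    """Build a connection string; only the environment differs between clouds."""
    parts = []
    for keyword, (variable, default) in SETTINGS.items():
        value = env.get(variable, default)
        if value is None:
            raise KeyError(f"missing required setting {variable}")
        parts.append(f"{keyword}={quote_value(value)}")
    return " ".join(parts)


# Hypothetical values for one deployment.
example_env = {
    "PGHOST": "db.example.com",
    "PGDATABASE": "assets",
    "PGUSER": "app",
    "PGPASSWORD": "it's secret",
}
print(postgres_dsn(example_env))
```

Each deployment supplies its own environment, for example through a Kubernetes Secret. The container image itself does not need to know which cloud hosts the database.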

For the IoT integration, we selected a cloud native implementation (e.g. Azure IoT Hub). Not only is this approach much more scalable, it’s also much faster to implement. Moreover, if needed, it can be rewritten fairly easily to work on another cloud. Our research into container-hosted IoT hubs found no solution that met our expectations, especially when it came to supporting two-way communication with sensors. To further minimise the cost of switching, we defined a canonical message format for domain IoT events. As a result, only message transformation takes place outside the Kubernetes cluster (e.g. in Azure Functions), and all other processing happens inside the cluster.
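A canonical message format keeps provider-specific details at the edge. The Python sketch below shows the kind of transformation that would run outside the cluster, for instance in a serverless function. Two hypothetical provider payload shapes are mapped to one canonical event, so everything downstream only ever sees the canonical form. The payload shapes, field names, and the `SensorReading` type are invented for illustration and do not reflect the actual Azure IoT Hub or IBM message formats.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from typing import Any, Callable, Dict


@dataclass(frozen=True)
class SensorReading:
    """Hypothetical canonical domain event consumed inside the cluster."""
    device_id: str
    metric: str
    value: float
    recorded_at: str  # ISO 8601 timestamp in UTC


def from_provider_a(raw: Dict[str, Any]) -> SensorReading:
    # Hypothetical shape: {"deviceId", "telemetry": {"name", "value"}, "ts": epoch seconds}
    return SensorReading(
        device_id=raw["deviceId"],
        metric=raw["telemetry"]["name"],
        value=float(raw["telemetry"]["value"]),
        recorded_at=datetime.fromtimestamp(raw["ts"], tz=timezone.utc).isoformat(),
    )


def from_provider_b(raw: Dict[str, Any]) -> SensorReading:
    # Hypothetical shape: {"device", "event", "payload": {"value"}, "timestamp": ISO string}
    # Assumes the timestamp carries an offset; replacing "Z" keeps
    # fromisoformat() working on Python versions before 3.11.
    ts = datetime.fromisoformat(raw["timestamp"].replace("Z", "+00:00"))
    return SensorReading(
        device_id=raw["device"],
        metric=raw["event"],
        value=float(raw["payload"]["value"]),
        recorded_at=ts.astimezone(timezone.utc).isoformat(),
    )


TRANSFORMS: Dict[str, Callable[[Dict[str, Any]], SensorReading]] = {
    "provider_a": from_provider_a,
    "provider_b": from_provider_b,
}


def to_canonical_json(source: str, raw: Dict[str, Any]) -> str:
    """Edge transformation: provider-specific payload in, canonical JSON out."""
    reading = TRANSFORMS[source](raw)
    return json.dumps(asdict(reading), sort_keys=True)


a = to_canonical_json("provider_a", {
    "deviceId": "sensor-7",
    "telemetry": {"name": "temperature", "value": 21.5},
    "ts": 1_700_000_000,
})
b = to_canonical_json("provider_b", {
    "device": "sensor-7",
    "event": "temperature",
    "payload": {"value": "21.5"},
    "timestamp": "2023-11-14T22:13:20Z",
})
print(a == b)  # True: both providers yield the same canonical event
```

Supporting another cloud then means adding one more entry to `TRANSFORMS`, while the services inside the cluster stay unchanged.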

The end result

We successfully delivered a solution running on Azure in time for the client’s trade show. The data storage trade-off has stood the test of time: we have done several product installations, and everything works properly on both Azure and IBM Cloud. Kubernetes also worked well. However, keep in mind that there are minor differences between providers. For example, an ingress controller is installed automatically on IBM Cloud, while on Azure you have to install one yourself. Additionally, the available Kubernetes storage classes differ from one cloud provider to another.
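Differences like these can be isolated in a small per-provider profile rather than scattered across manifests. The Python sketch below generates a Kubernetes PersistentVolumeClaim whose `storageClassName` comes from such a profile. The profile also records whether the deployment must install its own ingress controller. The storage class names are assumed examples only. Check which classes your cluster actually offers with `kubectl get storageclass`.

```python
from dataclasses import dataclass
from typing import Any, Dict


@dataclass(frozen=True)
class ClusterProfile:
    storage_class: str                # example name; verify with `kubectl get storageclass`
    install_ingress_controller: bool  # must our deployment install its own ingress controller?


# Assumed example values mirroring the differences described above.
PROFILES: Dict[str, ClusterProfile] = {
    "azure": ClusterProfile(storage_class="managed-premium", install_ingress_controller=True),
    "ibm": ClusterProfile(storage_class="ibmc-block-gold", install_ingress_controller=False),
}


def persistent_volume_claim(name: str, size: str, provider: str) -> Dict[str, Any]:
    """Return a PersistentVolumeClaim manifest (as a dict) for the given provider."""
    profile = PROFILES[provider]  # KeyError for providers without a profile
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": ["ReadWriteOnce"],
            "storageClassName": profile.storage_class,
            "resources": {"requests": {"storage": size}},
        },
    }


pvc = persistent_volume_claim("sensor-data", "10Gi", "ibm")
print(pvc["spec"]["storageClassName"])  # ibmc-block-gold
```

The resulting dict can be serialised with `json.dumps` and passed to `kubectl apply -f`, which accepts JSON as well as YAML. In practice, tools such as Helm values files or Kustomize overlays serve the same purpose.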

A few months after the show, we also developed a second IoT implementation using IBM Watson IoT Platform, which proved that the cloud native approach was a good compromise. However, you have to be aware of the differences between queuing implementations. It’s really easy to deliver new features using Azure Service Bus, especially if you have a .NET background (as we did). After switching to RabbitMQ, however, you might discover that certain queuing features, such as a retry count or delayed message delivery, are not supported. At that stage, you have to implement them in code, which introduces unnecessary complexity. To avoid these challenges, don’t simply choose what you already know for the sake of fast delivery; start with a more agnostic queue implementation instead.
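If the broker lacks a feature, the application has to provide it. The Python sketch below uses an in-memory queue as a stand-in for such a broker. The retry count is stored in a message header (the hypothetical `x-attempts`), delayed redelivery uses a time-ordered heap, and messages that exhaust their attempts move to a dead-letter list. This is not RabbitMQ client code. The class, the header name, and the linear backoff are assumptions, and time is passed in explicitly to keep the example deterministic.

```python
import heapq
import itertools
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Tuple


@dataclass
class Message:
    body: str
    headers: Dict[str, int] = field(default_factory=dict)


class InMemoryQueue:
    """Stand-in for a broker without native retry counts or delayed delivery.

    The retry count lives in a message header ("x-attempts", a hypothetical
    name) and delays are handled by a heap ordered by due time.
    """

    def __init__(self, max_attempts: int = 3, retry_delay: float = 5.0) -> None:
        self.max_attempts = max_attempts
        self.retry_delay = retry_delay
        self._heap: List[Tuple[float, int, Message]] = []
        self._seq = itertools.count()  # tie-breaker, so Messages are never compared
        self.dead_letters: List[Message] = []

    def send(self, message: Message, now: float, delay: float = 0.0) -> None:
        heapq.heappush(self._heap, (now + delay, next(self._seq), message))

    def receive(self, now: float) -> Optional[Message]:
        # Only hand out messages whose delivery time has arrived.
        if self._heap and self._heap[0][0] <= now:
            return heapq.heappop(self._heap)[2]
        return None

    def process(self, now: float, handler: Callable[[Message], None]) -> str:
        message = self.receive(now)
        if message is None:
            return "empty"
        attempts = message.headers.get("x-attempts", 0) + 1
        message.headers["x-attempts"] = attempts
        try:
            handler(message)
        except Exception:
            if attempts >= self.max_attempts:
                self.dead_letters.append(message)
                return "dead-letter"
            # Linear backoff: wait longer after each failed attempt.
            self.send(message, now, delay=self.retry_delay * attempts)
            return "retry"
        return "ok"


def always_fails(message: Message) -> None:
    raise RuntimeError("downstream unavailable")


queue = InMemoryQueue(max_attempts=2)
queue.send(Message("reading"), now=0.0)
print(queue.process(0.0, always_fails))  # retry (redelivery due at 5.0)
print(queue.process(1.0, always_fails))  # empty (not due yet)
print(queue.process(5.0, always_fails))  # dead-letter
```

Hiding these mechanics behind one small interface keeps the choice of broker open. That matches the advice to start with a more agnostic queue implementation.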

Editor’s note: For more information on innovative Cloud management solutions, download Objectivity’s latest complimentary eBook: Cloud Done Right: Effective Cost Management.

Photo by Veronica Lopez on Unsplash