The short answer? Probably not. Things are ramping up quickly in the AI world, which means we need to look beyond just Grok 3 and ChatGPT to see the full picture.

When Elon Musk launched Grok 3 in beta, he declared it king, saying “this might be the last time that any AI is better than Grok.”

But just one week after Grok 3’s release, Anthropic woke up from its long nap and unleashed Claude 3.7 Sonnet, now celebrated as the top AI for coding.

What we’re really seeing is that no AI is untouchable anymore. The AI “space race” is ramping up with contender after contender. Each one is the best at something—until it’s not.

5 Key takeaways

-

Impressive benchmarks

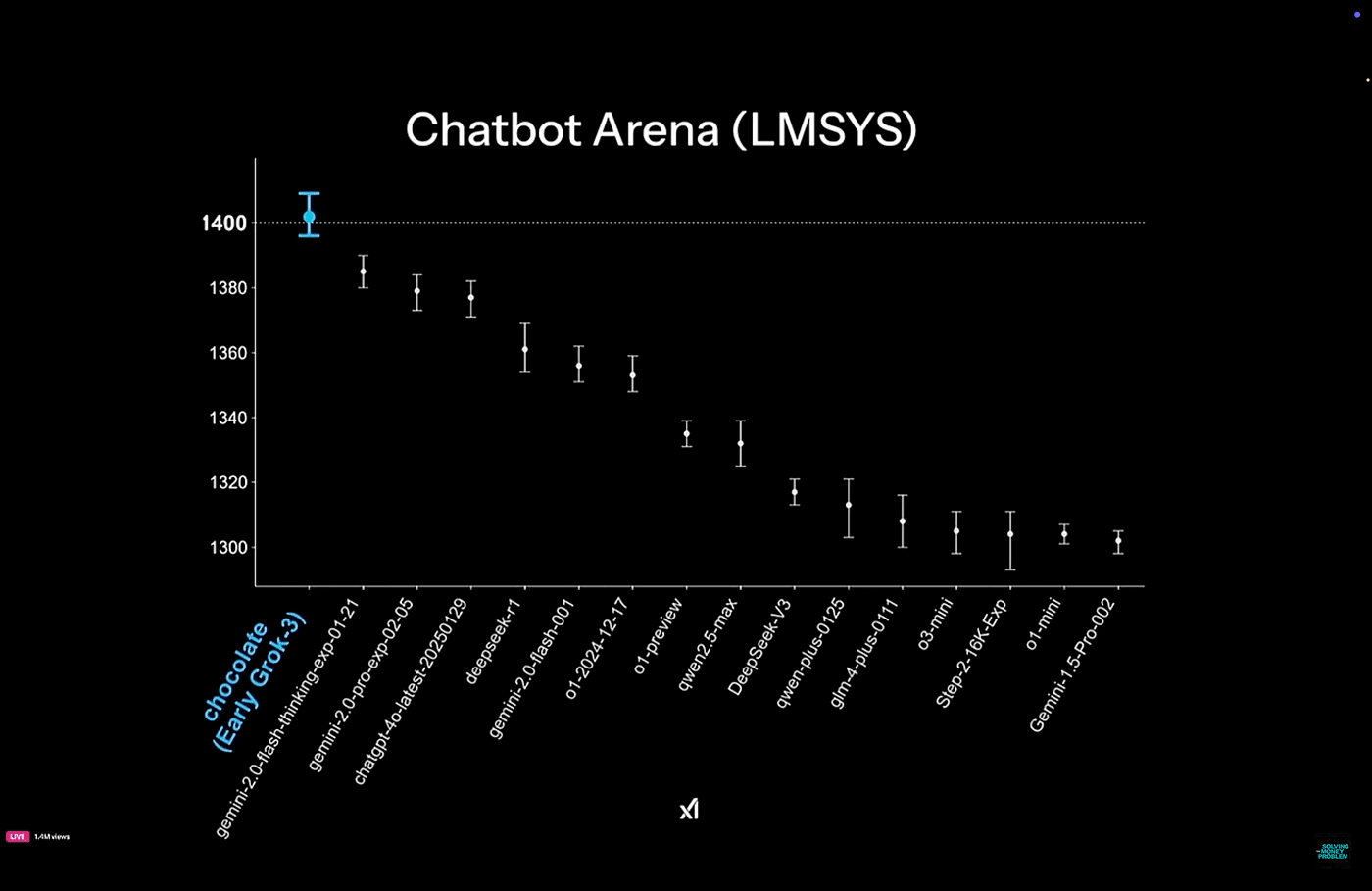

Grok 3 scores a 1400 ELO, beating ChatGPT’s o3 Mini and DeepSeek R1 in blind tests.

-

Pay-to-play model

You pay $40/month with X Premium+ or $30/month for SuperGrok to use it—no free option.

-

Speed over depth

Grok 3’s “Think mode” and DeepSearch deliver quick, punchy content and research (1,000-2,000 words), lagging behind o3’s meatier 75,000-word Deep Research outputs for in-depth SEO analysis.

-

Content creation falls flat

In tests, Grok 3 generates almost natural but basic drafts stuffed with exact-match keywords like “deepseek ai seo,” lacking the polish and depth of Claude 3.7 Sonnet’s natural, SEO-friendly prose.

-

Still improving

Grok 3, now in beta, keeps improving as Musk pushes daily updates and adds features—voice mode released one week after beta launch.

So what exactly is Grok 3, and why should SEOs and marketers care?

Grok 3 origins: Another AI titan emerges

Elon Musk’s new AI giant is, in his own words, a “scary smart” answer to the AI arms race.

Grok 3 is xAI’s successor to Grok 2, launched in beta by Musk in mid-February 2025. It has roughly 15x more compute than its predecessor and a “thinking” mode to rival top-tier reasoning models like ChatGPT’s o3-mini, Anthropic’s Claude 3.7 Sonnet, and DeepSeek’s R1. Grok 3 earned an impressive Elo score of about 1400 in blind tests, and xAI highlights its capabilities in self-critique, solution verification, backtracking, and first-principles reasoning.

The name “Grok” comes from Robert Heinlein’s novel “Stranger in a Strange Land.” To grok something is to understand it deeply and intuitively, a nod to xAI’s mission to understand the universe through truth-seeking AI.

For SEOs, Grok 3’s benchmark performance and reasoning capabilities make it a handy tool for some SEO tasks.

We’ll pit it against top contenders like ChatGPT, Claude, and DeepSeek to see if it lives up to Musk’s claim of being “scary smart” and if it’s worth your time.

Grok 3 vs ChatGPT and other AI Models

Please note: Accurately determining AI supremacy is tricky, especially when the team behind an AI chooses which benchmarks to report. Claims of being the best are often based on cherry-picked or manipulated results, and Musk’s team selected and published the benchmark results shown below.

To showcase Grok 3’s strengths, xAI entered an early version of its top model, codenamed Chocolate, into Chatbot Arena (LMSYS), where it faced other leading LLMs in thousands of blind tests. Its Elo score (a rating system originally built for chess and now used to rank chatbots from head-to-head votes) reached about 1400, blowing past every other contender.

Musk’s team refined Grok 3 by selecting its best pre-trained version and boosting it with reinforcement learning. This upped its reasoning capabilities and drove its high scores in blind tests.
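For readers unfamiliar with Elo, the sketch below shows the classic Elo formulas: the expected score of one model against another, and how a single head-to-head vote nudges both ratings. It is illustrative only. The K-factor of 32 and the ratings used are assumptions, and Chatbot Arena’s actual method differs in the details (LMSYS has described fitting a Bradley-Terry model over all votes rather than updating ratings one game at a time).

```python
def expected_score(rating_a: float, rating_b: float) -> float:
    """Probability that model A wins against model B under the classic Elo curve."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400))


def update_ratings(
    rating_a: float, rating_b: float, score_a: float, k: float = 32.0
) -> tuple[float, float]:
    """Apply one head-to-head result and return the new (A, B) ratings.

    score_a is 1.0 if A won the vote, 0.5 for a tie, and 0.0 if B won.
    k (assumed to be 32 here) controls how far a single vote moves the ratings.
    """
    delta = k * (score_a - expected_score(rating_a, rating_b))
    # Classic Elo is zero-sum: whatever A gains, B loses.
    return rating_a + delta, rating_b - delta


if __name__ == "__main__":
    # Hypothetical ratings with a 100-point gap.
    print(f"Expected win rate at +100 Elo: {expected_score(1400, 1300):.2f}")
    # Two evenly rated hypothetical models; A wins one vote.
    print(update_ratings(1500, 1500, score_a=1.0))
```

In other words, a 100-point Elo lead means the higher-rated model is expected to win roughly 64% of head-to-head votes, not every one of them.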

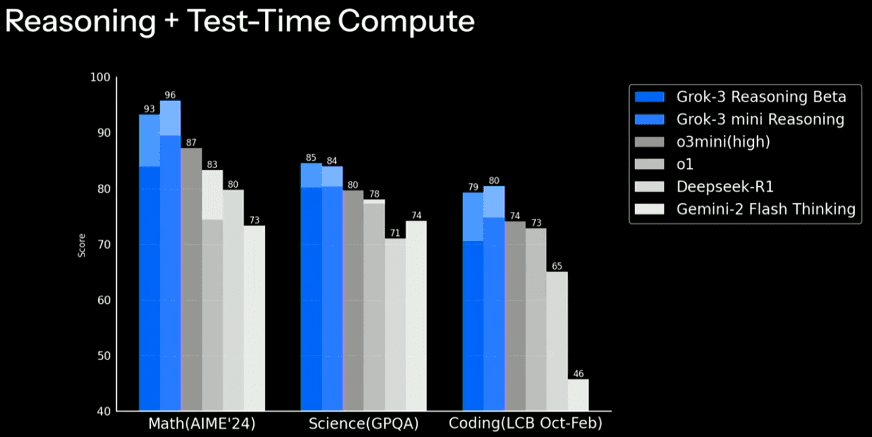

Grok 3 also outperformed competing models in reasoning and test-time compute, taking the top spot for math, science, and coding. Check the infographic below to see Grok leapfrogging major contenders like o3-mini and DeepSeek R1:

While this graph suggests that xAI has obliterated OpenAI in areas like multi-step math problem-solving and writing mid-level code, it’s misleading.

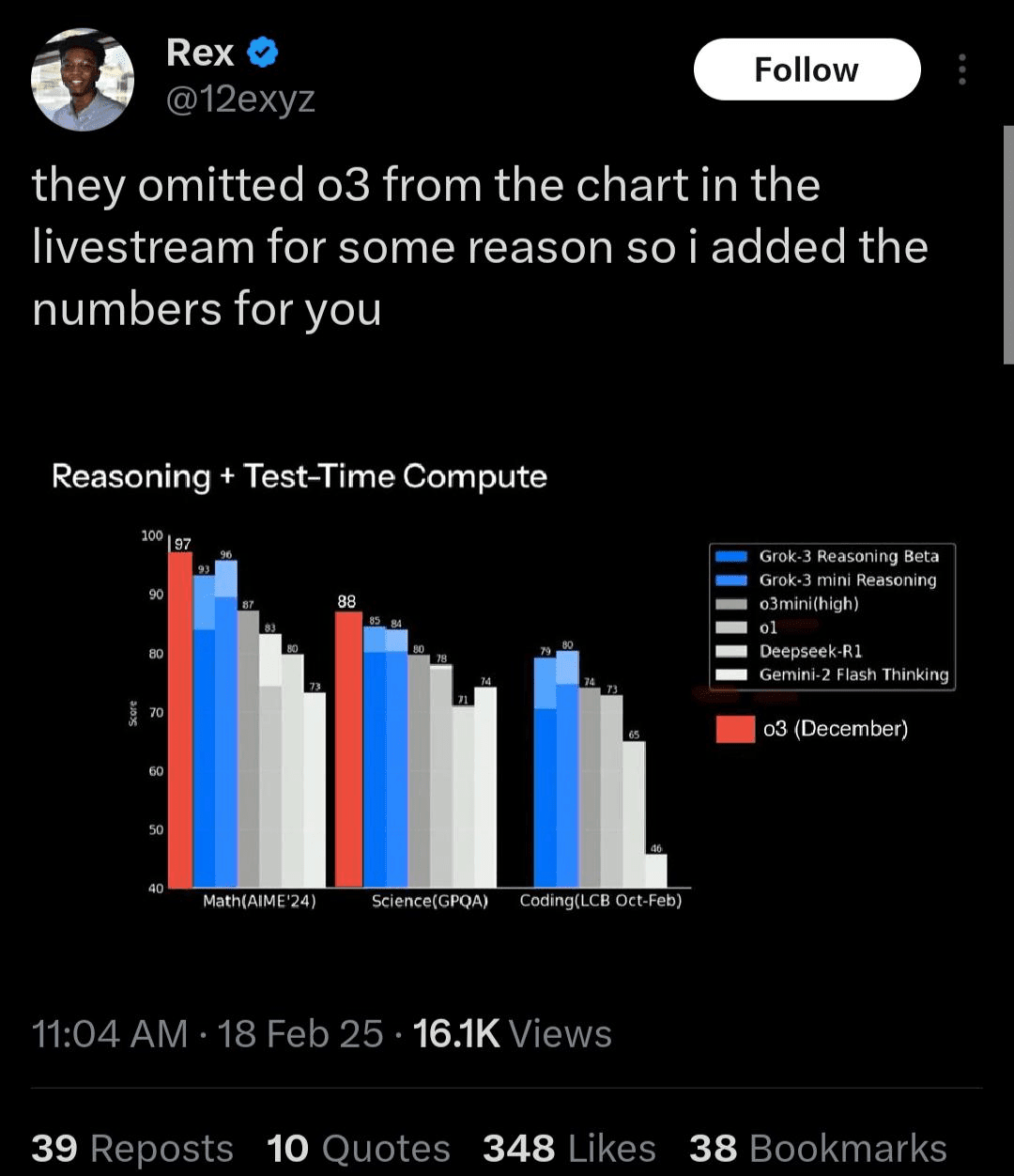

Musk conveniently left out OpenAI’s flagship reasoning model (base o3) since its benchmarks are still half-hidden. That’s a little too easy, so let’s dig into what we do know about OpenAI’s base o3 model for a more faithful comparison between xAI and OpenAI.

Grok 3 versus ChatGPT-o3

OpenAI’s o3 is most likely the top contender despite xAI’s claims of benchmark supremacy. The edited graph below shows OpenAI’s o3 model outperforming Grok 3’s reasoning skills in math and science.

This graph doesn’t show o3’s 71.7% score for coding and software engineering. Musk’s team also ran Grok 3 through a different coding benchmark (LiveCodeBench, or LCB) than the one OpenAI chose for o3 (SWE-bench Verified), so Grok 3’s impressive 75% and 80% scores might not hold up under the same testing conditions as o3.

Disclaimer: OpenAI has only tested the complete version of o3 in experiments. It isn’t available for commercial use, except in a limited version called Deep Research. The full Deep Research version is offered exclusively to OpenAI Pro users.

OpenAI’s simulated reasoning versus Grok 3’s “Think” mode

OpenAI’s o3 uses simulated reasoning, while Grok 3 uses a simpler version dubbed ‘Think mode’. Their approaches differ in scope:

- Speed versus comprehension: OpenAI’s base o3 model uses its full reasoning capacity, but it responds more slowly than Grok 3, whose answers are quicker and smoother.

- Reasoning effort adjustment: OpenAI’s o3-mini variants let you choose from low, medium, or high reasoning effort. A Grok 3-mini variant exists, but only Grok 3 beta is currently available, and you can’t adjust its reasoning effort yet.

For digital marketers, o3 is the better option for producing meatier content. It uses its full simulated reasoning to generate detailed, well-organized, and in-depth responses. You’ll have more luck creating authoritative, contextually rich, human-like copy with it.

Meanwhile, Grok 3’s “Think mode” pushes for speed and produces punchy, ad-friendly text. It’s the better option for rapid-fire content updates or catchy headlines.

Research depth

The speed versus depth trend continues.

OpenAI’s full Deep Research feature has an incredibly high research output. Powered by the base o3 model, it can think for up to 30 minutes and give responses as long as 100,000 tokens (roughly 75,000 words). It also has a 200,000-token context window.

xAI hasn’t shared details on Grok 3’s context window or maximum output tokens yet, but Grok 3 has a similarly named feature, DeepSearch, that operates on a much smaller scale. It caps its thinking time at a few minutes and produces quick responses of 1,000 to 2,000 words.
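To translate between token figures and word counts, a common rule of thumb for English text is about 0.75 words per token. The sketch below applies that ratio. It is an approximation only: actual counts depend on each model’s tokenizer and on the language and style of the text, and the 0.75 constant is an assumption, not a figure published by xAI or OpenAI for these specific models.

```python
# Rough heuristic for English prose; real ratios vary by tokenizer and text.
WORDS_PER_TOKEN = 0.75


def tokens_to_words(tokens: int) -> int:
    """Approximate word count for a given number of tokens."""
    return round(tokens * WORDS_PER_TOKEN)


def words_to_tokens(words: int) -> int:
    """Approximate token count for a given number of words."""
    return round(words / WORDS_PER_TOKEN)


if __name__ == "__main__":
    # Deep Research's maximum response length of 100,000 tokens.
    print(tokens_to_words(100_000))  # 75000
    # The 200,000-token context window expressed in words.
    print(tokens_to_words(200_000))  # 150000
    # DeepSearch's typical 1,000 to 2,000 word reports, expressed in tokens.
    print(words_to_tokens(1_000), words_to_tokens(2_000))  # 1333 2667
```

By this estimate, even DeepSearch’s longest typical reports use under 3,000 tokens, about 3% of Deep Research’s 100,000-token ceiling.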

OpenAI’s Deep Research is the winner for in-depth competitor or keyword research. The catch? Full access will cost you $200 a month.

Cost-effectiveness: Adding o3-mini into the mix

If you’re on a budget and don’t need to write a thesis paper for your clients, Grok 3 is easier on the wallet. It’s also faster. But OpenAI’s o3-mini variants match up well with Grok 3 and come in $10 to $20 a month cheaper.

- Deep Research (powered by base o3): The $200/month Pro plan gives you maximum access (120 uses) to the Deep Research feature and a 200K-token context window.

- o3-mini: The $20/month Plus plan unlocks OpenAI’s o3-mini variants. You only get 10 Deep Research requests per month and a smaller context window, but its capabilities rival Grok’s DeepSearch in depth and range.

- Grok 3: Its DeepSearch feature is accessible by upgrading to SuperGrok on Grok.com ($30/month) or purchasing an X Premium+ subscription ($40/month).

The o3-mini variants rival Grok 3 because they let you adjust the reasoning effort—low, medium, or high—to fine-tune the trade-off between speed and analytical depth.

Grok 3’s DeepSearch and “Think mode” features are fixed and favor lower analytical depth, but they come with no usage caps.

For SEOs with room in their budget who value flexible reasoning and rich insights for detailed reports, OpenAI’s Deep Research (base o3) and its mini variants (limited Deep Research) edge out Grok 3. But Musk’s AI still has its place. Grok 3’s comparatively surface-level research feature has unlimited uses and is ideal for rapid, ad-friendly outputs.
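One way to compare the two OpenAI plans is the effective price of each Deep Research report. The sketch below divides each monthly fee by its monthly Deep Research allowance, using the figures listed above. It assumes, hypothetically, that you use a plan only for Deep Research and spend the full allowance each month. Grok 3 is left out because, as described above, DeepSearch has no usage cap, so its per-report cost depends entirely on how much you use it.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Plan:
    name: str
    monthly_fee_usd: float
    deep_research_uses: int  # monthly Deep Research allowance


def cost_per_report(plan: Plan) -> float:
    """Monthly fee spread over the full allowance (assumes every use is spent)."""
    return plan.monthly_fee_usd / plan.deep_research_uses


PLANS = [
    Plan("ChatGPT Pro", 200.0, 120),
    Plan("ChatGPT Plus", 20.0, 10),
]

if __name__ == "__main__":
    for plan in PLANS:
        print(f"{plan.name}: ${cost_per_report(plan):.2f} per report")
```

Per report, Pro works out slightly cheaper (about $1.67 versus $2.00), but only if you actually run all 120 reports. If you need 10 or fewer a month, Plus costs a tenth as much in total.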

Grok 3 versus everyone

Musk’s claim that Grok 3 is “the best AI on Earth” doesn’t hold up, but its benchmark results are still strong. Its capabilities stand up well against competitors like Claude 3.7 Sonnet, ChatGPT-o3, ChatGPT-o3-mini, and DeepSeek R1. This is great news for SEOs: it means more choices and competitive API token prices.

The table below shows how Grok 3 stacks up commercially against other leading AI models.

| Model | Input price | Output price | Free to use? | Architecture |
|---|---|---|---|---|
| Grok 3 (Beta) | Undisclosed | Undisclosed | No. Requires an X Premium+ subscription ($40/month) or a SuperGrok subscription ($30/month). No API access yet. | 2.7T parameters, trained on 200,000 Nvidia H100 GPUs; offers advanced reasoning. |
| Grok 3 (Mini) | Undisclosed | Undisclosed | No. Requires an X Premium+ subscription ($40/month) or a SuperGrok subscription ($30/month). No API access yet. | Smaller, optimized for low-compute scenarios. |
| DeepSeek R1 | $0.55 per 1M tokens | $2.19 per 1M tokens | Yes. The app is free to use; API integration is token-based. | Mixture of Experts (MoE) with 671B parameters in total, of which 37B are active per token. |
| ChatGPT-o3 | Undisclosed | Undisclosed | No. Offers 120 uses of a limited version of o3 called Deep Research to Pro users for $200/month; Plus users ($20/month) and free users get 10 uses per month. No API access yet. | Not publicly disclosed; described as dense, high-compute reasoning using all parameters per task. |
| o3-mini | $1.10 per 1M tokens | $4.40 per 1M tokens | No. Requires a ChatGPT Plus subscription ($20/month) or OpenAI API access; the free tier is limited to basic usage. | Dense transformer architecture with ~200B parameters, all used per task. |
| Claude 3.7 Sonnet | $3 per 1M tokens | $15 per 1M tokens | Yes. The app is partially free to use, with unspecified limits and higher limits on paid plans. API integration is token-based. | High-density architecture optimized for reasoning and context processing. Parameter count not publicly disclosed. Balances computational efficiency with analytical depth while using all parameters per task. |


SEOs should see this table as proof that Grok 3 is more than just another AI model entering the market. Like DeepSeek’s, its emergence is driving broader industry trends.

With its competitive “Think” mode, nifty “DeepSearch” feature, and affordable pricing, Grok 3 shows that AI is becoming more dynamic and cost-effective.
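Since most of these models are billed per token through their APIs, estimating what one request costs can make the price gaps concrete. The sketch below uses the per-1M-token input and output prices listed above for the three models with published prices; Grok 3 and o3 are omitted because their API prices are undisclosed. The request size is a hypothetical example, and because reasoning models generally bill their hidden “thinking” tokens as output tokens, real costs can run higher than this estimate.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    input_per_million: float   # USD per 1M input tokens
    output_per_million: float  # USD per 1M output tokens


# List prices as quoted in the comparison above.
PRICES = {
    "DeepSeek R1": Pricing(0.55, 2.19),
    "o3-mini": Pricing(1.10, 4.40),
    "Claude 3.7 Sonnet": Pricing(3.00, 15.00),
}


def request_cost(p: Pricing, input_tokens: int, output_tokens: int) -> float:
    """Estimated USD cost of one API call.

    output_tokens should include any billed reasoning tokens, not just visible text.
    """
    return (
        input_tokens * p.input_per_million + output_tokens * p.output_per_million
    ) / 1_000_000


if __name__ == "__main__":
    # Hypothetical request: a 2,000-token prompt and a 3,000-token response.
    for name, pricing in PRICES.items():
        cost = request_cost(pricing, input_tokens=2_000, output_tokens=3_000)
        print(f"{name}: ${cost:.4f}")
```

For this hypothetical request, that works out to roughly $0.008 for DeepSeek R1, $0.015 for o3-mini, and $0.051 for Claude 3.7 Sonnet, so the same job costs about six to seven times more on Claude than on DeepSeek.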

But where does Grok 3 stand on a practical level? Let’s move beyond the theoretical and see how Grok 3 performs on SEO tasks compared to its rivals.

Real-World SEO Face-Off: Grok 3 Against Other AI Titans

LLMs are getting pretty good at doing some SEO work, but not all of it. Hypercomplex SEO tasks like website auditing, keyword research, and backlink monitoring are best left to dedicated tools like SE Ranking.

But you can get Grok 3 and its rivals to work magic with content creation, topical research, and AI-detection dodging.

Content Creation

We asked Grok 3 (beta), o3-mini, Claude 3.7 Sonnet, and DeepSeek R1 to create an enjoyable, SEO-optimized article for the keyword “deepseek ai seo.” The prompt was simple: we didn’t give further instructions or use added features like o3-mini’s Deep Research or Grok 3’s “Think” mode.

Here’s how each model performed:

- DeepSeek R1: Took the prompt too literally, repeating the exact-match keyword (deepseek ai seo) so often that the text felt unnatural. The article was reasonably well structured, with clickbait headlines and useful bullet points, but lacked depth. It also tacked a meta description onto the end but did not suggest a title or URL.

- Grok 3 (beta): Also took the prompt too literally, using the exact-match keyword throughout, but its text was more human-like. It produced large walls of text unsuitable for blog writing, though it was more thorough and even included step-by-step instructions on how to use DeepSeek to improve SEO. But like DeepSeek R1, Grok 3 lacked a professional SEO writer’s depth and scope. Very basic approach.

- o3-mini: The trend of unnatural, exact-match keyword stuffing continued. It even created the headline “What is DeepSeek AI SEO?” Its content structure mirrored Grok 3’s but was slightly less comprehensive, and its tone was less natural than Grok 3’s but more natural than DeepSeek R1’s.

- Claude 3.7 Sonnet: The only AI to use the requested keyword as inspiration instead of stuffing in the exact-match version. Claude’s structure was also great, with catchy, relevant headings, and punchy, useful sections. The text read naturally, but its understanding of the topic was slightly above basic—still a leap above the rest.

Claude 3.7 Sonnet was the clear winner in this test. It was the only model that recognized, without being told, that “deepseek ai seo” is not a natural phrase. Claude found creative ways to work around this awkward keyword and wrote headings that made sense.

All four models, however, opened with cliché introductions, each saying something like “In today’s digital landscape, staying ahead of search engine algorithms is crucial for online visibility.”

Not a single AI deviated from this narrative.

Topical research

Popular SEO YouTuber Julian Goldie also performed some experiments. After testing o3-mini, Grok 3, and Perplexity’s advanced research features, he noticed differences in each model’s depth and breadth.

Here’s how the three AIs and their research features stacked up when asked to find the most up-to-date information on Grok 3:

- Grok 3: Goldie was happy with Grok 3’s report. It pulled the latest info from news articles and tweets but lacked o3-mini’s depth. Its response was the most to-the-point and took the least time to produce, at only about 1,500 words.

- o3-mini: Goldie was most impressed by o3-mini’s Deep Research feature, which quickly (though not as quickly as Grok 3) produced a long, 4,700-word, research-paper-style response. Goldie even said it was “better than what most professionals can come up with.”

- Perplexity: Perplexity spent the most time researching the topic. It came back with about 3,500 words and even generated an infographic comparing Grok 3 to similar models.

According to Goldie, o3-mini and its Deep Research feature were the clear winner for thorough, unbiased research (great for YMYL topics), but he gave a nod to Perplexity for its impressive output, given that it’s free to use.

Goldie said that Grok 3 was decent but its quick, to-the-point reports were unsuitable for the dense topical research needed for SEO content.

AI-detectability

Goldie asked o3-mini, DeepSeek R1, and Grok 3 to create an article that was 100% non-AI-detectable, then ran each article through the online detector ZeroGPT. Here’s what he found:

- o3-mini: Easy to detect, at 78% AI

- Grok 3 (normal mode): Still pretty easy to detect, at 62% AI

- Grok 3 (think mode): Much worse than expected, at 95% AI

- DeepSeek R1: Clearly AI, at 100% AI

We tested Claude 3.7 Sonnet with the same prompt. It created a surprisingly human-like article about digital minimalism, titled “The lost art of being untouchable.” It got a 24% AI-detectability score, with ZeroGPT suspecting that “some sentences or passages may have been edited by AI.”

AI Showdown Summary Chart: A detailed snapshot

Check out the chart below for a closer look at how Grok 3 stacks up against competing AI titans. It distills our findings into a single snapshot, highlighting which AI excels where for SEO.

| Model | Content Creation | Research | General Reasoning | AI Detectability |
|---|---|---|---|---|
| Grok 3 | Ideal for humanized, lengthy drafts. Occasionally writes punchy content but needs editing for blogs. | To-the-point research. Pulls in recent information efficiently but lacks the depth of o3-mini. | Takes a versatile problem-solving approach. Strong in logic, game development, and creative tasks beyond technical domains. | Low detectability, ideal for content marketing where punchy, quick reading is critical. |
| o3-mini | Best for fast, efficient content. Produces quality articles and reports quickly, though less humanized than Grok 3. | Rapid, extensive research. Generates comprehensive reports in seconds, excels in technical domains. | Ideal for technical reasoning. Optimized for STEM applications but less versatile than Grok 3. | Balanced detectability. More detectable than Grok 3 but suitable where slight AI detection is acceptable. |
| DeepSeek R1 | Ideal for basic content needs. Struggles with creating comprehensive, engaging content and uses cliché metaphors. | Great for technical research. Average performer, suitable for technical topics but not broad or fast synthesis. | Ideal for math, coding, and science. Strong in technical reasoning but lacks broader applicability. | Highly detectable, not suitable for human-like content needs. Best for non-stealth tasks. |
| Perplexity | Ideal for concise, factual content. Focuses on accurate, citation-backed answers but lacks creativity for longer-form content. | Great for quick, accurate research. Excels at retrieving up-to-date information with reliable sources, ideal for fact-checking. | Ideal for straightforward queries. Handles simple reasoning well but struggles with complex, multi-step problems. | Neutral detectability, suitable for tasks where AI origin isn’t a concern. |
| Claude 3.7 Sonnet | Impressive creative writing and storytelling output. Produces highly human-like, engaging text for articles, stories, and scripts. | Gives in-depth analyses. Strong at synthesizing information for detailed reports, though not as fast as o3-mini. | Ideal for language-based reasoning. Excels in understanding and generating text but less focused on quick technical problem-solving. | Least detectable, making it ideal for content that needs to pass as human-written. |


This face-off showed that Claude 3.7 Sonnet, not Grok 3, gives the standout responses. It created the most natural, human-like content and rarely triggered AI detectors.

But we also noticed that each model performs differently under different conditions. Since these tests are informal and results vary, we suggest experimenting with each AI yourself to find the best fit.

What Grok 3 means for the future of AI

Musk’s AI accelerated an already rapid pace of AI advancement.

The proof?

Major model releases and updates used to occur every few months, but the pace has quickened.

When we started writing this article, Grok 3 had just launched. One week later, xAI released a controversial voice mode that can argue with users and even swear at them. Then Claude 3.7 Sonnet arrived in late February 2025 with its groundbreaking hybrid reasoning approach, and just days after that, OpenAI released GPT‑4.5 to Pro and Plus users, touting improved emotional intelligence and far fewer hallucinations.

Grok 3’s launch is Elon Musk’s move to claim a piece of the AI market while it’s booming.

And while no single AI model currently excels at everything, each is finding its niche. We expect that trend to continue:

- Grok 3: Generates fast, good (but not great) content with unlimited research. Best for human-like writing that avoids AI detection.

- OpenAI’s o3 family: Top-tier research and technical reasoning, but expensive.

- Claude 3.7 Sonnet: Creates natural-sounding content that rarely triggers AI detectors.

- DeepSeek R1 and Perplexity: Excel in technical domains and well-cited research, respectively.

The days of “one AI to rule them all” are behind us. And whether Elon Musk’s claim about Grok 3 being the “best AI on Earth” proves true or not is less important than his role in pushing the snowball further down the hill to democratize the AI market.

For digital marketers and SEOs, that’s a win because we get better tools, and more of them—and more competition means friendlier prices.